by Philip D. Harvey, PhD; Michelle L. Miller, MS; Raeanne C. Moore, PhD; Colin A. Depp, PhD; Emma M. Parrish, BS; and Amy E. Pinkham, PhD

Dr. Harvey is with the University of Miami Miller School of Medicine in Miami, Florida, and Research Service at Bruce W. Carter VA Medical Center in Miami, Florida. Ms. Miller is with the University of Miami Miller School of Medicine in Miami, Florida. Dr. Moore is with the Department of Psychiatry at the University of California in La Jolla, California. Dr. Depp is with the Department of Psychiatry at the University of California in La Jolla, California, and Veterans Affairs San Diego Healthcare System in La Jolla, California. Ms. Parrish is with the Joint Doctoral Program in Clinical Psychology at San Diego State University/University of California, San Diego. Dr. Pinkham is with the University of Texas at Dallas in Richardson, Texas, and the UT Southwestern Medical Center in Dallas, Texas.

FUNDING: The National Institute of Mental Health funded this study (R01MH112620).

DISCLOSURES: Dr. Harvey has received consulting fees or travel reimbursements from Acadia Pharma, Alkermes, Bio Excel, Boehringer Ingelheim, Minerva Pharma, and Regeneron Pharma during the past year. He receives royalties from the Brief Assessment of Cognition in Schizophrenia. He is chief scientific officer of i-Function, Inc. He had a research grant from Takeda and from the Stanley Medical Research Foundation. Dr. Pinkham has served as a consultant to Roche Pharma. The other authors have no conflicts of interest relevant to the content of this article.

ABSTRACT: Objective. The development and deployment of technology-based assessments of clinical symptoms are increasing. This study used ecological momentary assessment (EMA) to examine clinical symptoms and related these sampling results to structured clinical ratings.

Methods. Three times a day for 30 days, participants with bipolar disorder (n=71; BPI) or schizophrenia (n=102; SCZ) completed surveys assessing five psychosis-related and five mood symptoms, in addition to reporting their location and who they were with at the time of survey completion. Participants also completed Positive and Negative Syndrome Scale (PANSS) interviews with trained raters. Mixed-model repeated-measures (MMRM) analyses examined diagnostic effects and the convergence between clinical ratings and EMA sampling.

Results. In total, 12,406 EMA samples were collected, with 80-percent adherence to prompts. EMA-reported psychotic symptoms manifested substantial convergence with equivalent endpoint PANSS items. Patients with SCZ had more severe PANSS and EMA psychotic symptoms. There were no changes in symptom severity scores as a function of the number of previous assessments.

Conclusions. EMA-surveyed clinical symptoms converged substantially with commonly used clinical rating scales in a large sample with high adherence. These findings suggest that remote assessment of clinical symptoms is valid and practical and is not associated with changes in symptoms as a function of reassessment, with the additional benefits of “in the moment” sampling, such as eliminating recall bias and the need for informant reports.

Keywords: Schizophrenia, bipolar disorder, ecological momentary assessment, psychosis, depression

Innov Clin Neurosci. 2021;18(1–3):

Real-time assessment of clinical symptoms and functional behaviors via mobile devices is becoming more widely used in research and clinical settings.1,2 Previous studies have used a variety of methods to collect these data, variously referred to as ecological momentary assessment (EMA) or experience sampling method (ESM), with the overarching term “digital biomarkers” now in wide use.3 Earlier work employed timers plus diaries, personal digital assistants (PDAs), and smart devices, including smartphones and tablets.4,5 Connected smart devices make it possible to tailor the survey on a momentary basis depending on responses or context (e.g., launching a special survey or assessment contingent on a certain response: “event-contingent” responding) and to send additional prompts if a survey is not answered.
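The event-contingent and reminder logic described above can be sketched as a small rule function. This is a hypothetical illustration of the general technique, not the software used in this study: the severity threshold of 5, the cap of two reminders, the `Prompt` structure, and the action names are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical settings; none of these values come from the study.
FOLLOW_UP_THRESHOLD = 5   # 1-7 severity at or above which an extra survey is launched
MAX_REMINDERS = 2         # reminders sent before an unanswered prompt counts as missed


@dataclass
class Prompt:
    survey_id: str
    reminders_sent: int = 0
    responses: Optional[dict] = None  # item name -> 1-7 rating, None if unanswered


def next_actions(prompt: Prompt) -> list[str]:
    """Return the actions a hypothetical scheduler would take for one prompt."""
    if prompt.responses is None:
        # Unanswered: re-prompt until the reminder cap is reached.
        if prompt.reminders_sent < MAX_REMINDERS:
            return ["send_reminder"]
        return ["mark_missed"]
    actions = ["store_responses"]
    # Event-contingent rule: any item at or above the threshold triggers a follow-up survey.
    if any(score >= FOLLOW_UP_THRESHOLD for score in prompt.responses.values()):
        actions.append("launch_follow_up_survey")
    return actions
```

For example, a response of `{"paranoia": 6, "sadness": 2}` would be stored and would also launch a follow-up survey, whereas an unanswered prompt with no reminders yet sent would trigger a reminder.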

There are a variety of uses for EMA, including examination of geolocation, activities (social and nonsocial), and clinical symptoms.6–9 While many of the studies to date have been observational, these assessments can easily be used to deliver interventions and measure clinical outcomes in treatment studies.10–12 For EMA to be used successfully in such studies, several critical pieces of validity data will be required. The data needed for these studies to meet regulatory approval will likely include adherence to sampling, validity of the responses, and convergence with in-person clinical assessment scales. A considerable amount of validity data has already been collected in diverse samples of participants with severe mental illness. For example, some studies have found that reports of psychosis, including hallucinations and delusions, collected via EMA are convergent with the results of structured interviews targeting the same symptoms, although these samples are sometimes small. Furthermore, geolocation information collected with passive assessment correlates well with participant-reported location, on the one hand, and with clinical ratings reflecting reduced motivation and activity, on the other.13,14 Finally, because EMA data are momentary, they are not affected by recall bias and appear to be considerably more valid than retrospective reports of everyday functioning and mood experiences.15

Some methodological concerns arise in the use of these methods. First, adherence must be sufficient to obtain a meaningful sample of experience during the sampling period. To that end, several studies have reported acceptable adherence rates for participants with severe mental illness, with response rates averaging well over 80 percent and with participants who have greater cognitive impairment providing fewer responses.7,16 Density of sampling also seems important; in a seeming paradox, some studies have suggested that more frequent daily or weekly sampling is associated with better adherence, although there are exceptions.17–20 It has been suggested that expecting prompts frequently might lead to greater willingness to respond. Data have also suggested that using EMA, compared to paper-and-pencil assessments, leads to enhanced detection of signals and a lower number needed to treat.21 Compensation on a survey-by-survey basis has also been shown to correlate with greater adherence than a flat rate for participation.7 It is also important to understand whether participation in EMA sampling in a treatment study could induce a placebo effect through self-monitoring. To date, several reviews have found no evidence of EMA-induced changes in symptoms reported by repeated EMA assessments across various follow-up periods.22–24 Finally, the duration of the sampling period is important. Clinical treatment studies will typically require at least a month of treatment, but for studies of longer duration, sampling might need to be scheduled only at selected time points.

This paper presents the results of a study of the relationship between EMA-assessed clinical symptoms and clinical ratings collected with a structured clinical rating scale. Our study has several advantages. The sample size is large (173 participants) and includes participants with SCZ and BPI. Clinical assessments were collected at both the beginning and end of the 30-day EMA assessment period. Participants were sampled three times per day for 30 days and asked a series of questions about where they were, who they were with, and what they were doing, as well as questions targeting mood and psychotic symptoms. The activity-focused assessments are being published separately; here, we focus on the convergence between EMA-reported symptoms and clinically rated symptoms over the sampling period. As in our previous studies,7,20 we also examined the convergence between negative symptoms and EMA reports of being home versus away and alone versus with someone (where and who), as well as the convergence between mood and psychosis symptoms and reported who and where data.25 This interest is based on our previous finding, in a sample of 93 patients with SCZ sampled 10 times per day over one week, that being home and alone appeared to correlate with increased suicidal ideation and decreased interest in social activities.20 In that study, suicidal ideation, but not delusional thinking, was more common when participants reported they were alone. Among those participants, the adherence rate was 61 percent, with an average of 38 surveys per participant available for analysis.

We tested several hypotheses in this study. First, we hypothesized that there would be specific correlations between EMA-assessed psychotic symptoms and their equivalent items on the PANSS at the endpoint assessment. Second, we hypothesized that a greater proportion of surveys answered while home and alone would be associated with more severe negative symptoms of reduced emotional experience, as rated on the PANSS at the endpoint assessment. Third, we hypothesized that repeated sampling would not lead to changes in self-reported symptom severity. Finally, we tested whether psychotic symptoms reported with EMA would converge with clinically assessed psychotic symptoms over the course of the assessment period, as EMA reports of psychotic symptoms have consistently been associated with clinical ratings of severity across different symptoms, including delusions and hallucinations.8

Methods

Participants. Individuals aged 18 to 60 years who met Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition (DSM-5) criteria for schizophrenia (any subtype), schizoaffective disorder, or bipolar I or II disorder, with or without current or previous psychotic symptoms, participated in this study. Recruitment was conducted at three sites: the University of Miami Miller School of Medicine (UM), the University of California, San Diego (UCSD), and the University of Texas at Dallas (UTD). UM patients were recruited from the Jackson Memorial Hospital-University of Miami Medical Center and the Miami VA Medical Center. UCSD patients were recruited from the UCSD Outpatient Psychiatric Services clinic, a large public mental health clinic; the San Diego VA Medical Center; other local community clinics; and via word of mouth. UTD patients were recruited from Metrocare Services, a nonprofit mental health services organization in Dallas County, Texas, and from other local clinics. The study was approved by the institutional review board (IRB) at UTD and endorsed by the local IRB at each of the sites. Diagnostic information was collected by trained interviewers using the Mini International Neuropsychiatric Interview (MINI) and the psychosis module from the DSM-5 version of the Structured Clinical Interview for DSM Disorders (SCID), and a local consensus procedure was used to generate final diagnoses. All participants demonstrated decisional capacity to consent and signed informed consent after receiving a complete description of the study.

Exclusion criteria. Exclusion criteria included: 1) history of or current medical or neurological disorders that might affect brain functioning (e.g., central nervous system [CNS] tumors, seizures, or loss of consciousness for over 15 minutes), 2) history of or current intellectual disability (intelligence quotient [IQ]<70, assessed via the Wide Range Achievement Test 3 [WRAT-3]26) or pervasive developmental disorder according to DSM-IV criteria, 3) substance use disorder not in remission for at least six months, 4) visual or hearing impairments that interfere with assessment, and 5) lack of proficiency in English. Participants were also excluded if they had been hospitalized within the past six weeks or if they had any medication changes or dose changes greater than 20 percent within the past six weeks.

Symptoms assessment. Symptom severity was evaluated with the PANSS,27 which was administered in its entirety by trained raters. These raters had extensive experience working with patients with SCZ and BPI in previous studies, and all were trained to adequate reliability (intraclass correlations [ICCs]>0.80) by the study principal investigator (PI). The PANSS consists of 30 items in three subscales: seven positive symptom items (P1–P7), seven negative symptom items (N1–N7), and 16 general psychopathology items (G1–G16). Each item is scored on a seven-point Likert scale ranging from 1 to 7; thus, the positive and negative subscales each range from 7 to 49, and the general psychopathology subscale ranges from 16 to 112.27 The PANSS was administered the day before the first EMA survey and immediately after the 30-day EMA period concluded.
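The subscale arithmetic can be checked with a short sketch. It assumes item ratings are held in a dictionary keyed by item code (`P1` to `P7`, `N1` to `N7`, `G1` to `G16`); the function names are hypothetical, and the totals are simple sums of 1–7 item ratings as described above.

```python
# Number of items in each PANSS subscale, as described in the text.
SUBSCALES = {"P": 7, "N": 7, "G": 16}


def subscale_scores(items: dict[str, int]) -> dict[str, int]:
    """Sum 1-7 item ratings into positive (P), negative (N), and general (G) totals.

    `items` maps item codes such as "P1" or "G16" to ratings; all 30 items are required.
    """
    totals = {}
    for prefix, n_items in SUBSCALES.items():
        codes = [f"{prefix}{i}" for i in range(1, n_items + 1)]
        ratings = [items[code] for code in codes]  # KeyError if an item is missing
        if any(not 1 <= r <= 7 for r in ratings):
            raise ValueError(f"{prefix} ratings must be between 1 and 7")
        totals[prefix] = sum(ratings)
    return totals


def subscale_range(prefix: str) -> tuple[int, int]:
    """Minimum and maximum possible total: every item rated 1 versus every item rated 7."""
    n_items = SUBSCALES[prefix]
    return (1 * n_items, 7 * n_items)
```

`subscale_range("P")` returns `(7, 49)` and `subscale_range("G")` returns `(16, 112)`, matching the ranges stated above.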

Negative symptom models. A two-factor negative symptom model, distinguishing reduced emotional expression from reduced emotional experience, was developed and tested in several samples by Khan et al. in 2017.28 The model has also been examined for its link to functional outcomes,29,30 with reduced emotional experience found to be a better predictor of social outcomes than reduced emotional expression or total negative symptom factor scores. The items in the PANSS Reduced Emotional Experience factor are Emotional Withdrawal (N2), Passive/Apathetic Social Withdrawal (N4), and Active Social Avoidance (G16). The items in the PANSS Reduced Emotional Expression factor are Blunted Affect (N1), Poor Rapport (N3), Lack of Spontaneity and Flow of Conversation (N6), and Motor Retardation (G7). As reduced emotional experience is the domain most consistently related to social functioning, we used this domain for correlational analyses with EMA-reported daily activities.
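A minimal sketch of the two factor scores follows, using the item assignments listed above. Summing the items is an assumption made for illustration (the text does not state whether factor scores were sums or means), and the function name and example ratings are hypothetical.

```python
# Item membership as listed in the text; summing the items is an assumption.
REDUCED_EXPERIENCE = ["N2", "N4", "G16"]
REDUCED_EXPRESSION = ["N1", "N3", "N6", "G7"]


def negative_symptom_factors(items: dict[str, int]) -> dict[str, int]:
    """Return summed two-factor negative symptom scores from PANSS item ratings."""
    return {
        "reduced_experience": sum(items[code] for code in REDUCED_EXPERIENCE),
        "reduced_expression": sum(items[code] for code in REDUCED_EXPRESSION),
    }


# Hypothetical ratings for one participant.
ratings = {"N1": 3, "N2": 4, "N3": 2, "N4": 5, "N6": 2, "G7": 1, "G16": 3}
print(negative_symptom_factors(ratings))  # {'reduced_experience': 12, 'reduced_expression': 8}
```

Under this summing assumption, the three-item experience factor ranges from 3 to 21 and the four-item expression factor from 4 to 28.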

EMA procedures. All participants were provided with a Samsung Galaxy S8 smartphone with the Android operating system (OS). Our software program sent weblinks to the EMA surveys to these phones three times per day, seven days per week, over a 30-day period, with data instantly uploaded to a Health Insurance Portability and Accountability Act (HIPAA)-compliant cloud-based data capture system (Amazon Web Services [AWS]). No data were stored on the device, and data were accessible to study staff in real time. The signals occurred at stratified random intervals that varied from day to day within, on average, 3.0-hour windows (with a 2-hour minimum), starting at approximately 9:00 a.m. and ending at 9:00 p.m. each day. The first and last daily assessment times were adjusted to accommodate each participant’s typical sleep and wake schedules. All responses were time-stamped, and the weblinks were active only within a one-hour period following the signal. A training session (typically <20 minutes) covered how to operate and charge the device and respond to surveys, including the meaning of all questions and response choices, and example surveys were conducted with participants to ensure proficiency with the smartphones. Participants were also contacted by telephone on the first and third days of EMA to troubleshoot and encourage adherence, and an on-call staff person was available to answer questions throughout the 30-day period.
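A stratified random schedule with a minimum gap can be sketched as follows. This is an illustration under stated assumptions, not the study's scheduling software: it assumes the participant's day is split into three equal strata (four hours each for a 9:00 a.m. to 9:00 p.m. day), draws one prompt per stratum, and redraws until consecutive prompts are at least two hours apart. The function names and the `max_tries` guard are hypothetical; the one-hour link window comes from the text.

```python
import random
from typing import Optional


def schedule_prompts(day_start: int, day_end: int, n_prompts: int = 3,
                     min_gap: int = 120, rng: Optional[random.Random] = None,
                     max_tries: int = 10_000) -> list[int]:
    """Draw one prompt time (minutes after midnight) per equal-width stratum.

    Draws are repeated until consecutive prompts are at least `min_gap` minutes apart.
    """
    rng = rng or random.Random()
    width = (day_end - day_start) / n_prompts
    for _ in range(max_tries):
        # One uniform draw inside each stratum [start + k*width, start + (k+1)*width).
        times = [int(day_start + k * width + rng.random() * width) for k in range(n_prompts)]
        if all(b - a >= min_gap for a, b in zip(times, times[1:])):
            return times
    raise RuntimeError("could not satisfy the minimum gap; widen the window or lower min_gap")


def link_is_open(signal_minute: int, response_minute: int, window: int = 60) -> bool:
    """True if a response falls within `window` minutes after the signal."""
    return 0 <= response_minute - signal_minute <= window


# Example: a 9:00 a.m. to 9:00 p.m. day with a seeded generator for reproducibility.
print(schedule_prompts(9 * 60, 21 * 60, rng=random.Random(42)))
```

Redrawing the whole day keeps each prompt uniform within its stratum while enforcing the gap; passing a seeded `random.Random` makes a participant's schedule reproducible.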

EMA surveys consisted of check-box questions about current location and behaviors. The first screen asked about the participant’s location (home vs. away), and the second asked whether the participant was alone or with someone. If with someone, participants were next asked with whom. The subsequent screens were then customized to deliver home versus away and alone versus with someone queries tapping potential activities. These data are presented in a separate report.

Questions about moods and symptoms since the last EMA query were also asked at each survey. These questions targeted mood characteristics as well as the occurrence and severity of psychotic symptoms. The psychosis items included hearing voices, paranoid ideation, mind reading (self or others), getting messages, and having special powers/abilities. Mood questions included sadness, relaxation, excitement, happiness, and anxiety. All symptom items were rated on the same 1–7 severity scale as the PANSS. Symptom scores were reported as the severity score for each survey.

Data analyses. To examine all of the survey data together with the clinical ratings, we used a hierarchical linear modeling (HLM) approach, specifically a mixed-model repeated-measures (MMRM) strategy. We examined the course of the EMA-rated psychotic symptoms over the assessment period, entering diagnosis as a between-groups factor and day (1–30) and survey (1–3) as within-subjects factors, with subject as a random intercept. We then added the where and who data from all of the EMA samples collected during the sampling period, along with their two-way interaction, to examine possible influences of location and social context on symptoms measured across the 30-day protocol. We used the same analysis model to predict clinical ratings of reduced emotional experience at endpoint from diagnosis, the where and who data, and their two-way interaction.
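One way to write these models, as a sketch rather than the authors' exact specification: y_{idk} is participant i's rating on day d (1–30) at survey k (1–3), Dx_i is a diagnosis indicator, γ_d and δ_k are categorical day and survey effects (one reference level of each fixed at zero), and u_i is the participant random intercept. The normal error terms and the 0/1 coding of H (home) and A (alone) are assumptions, since the parameterization and covariance structure are not reported. Treating day and survey as categorical factors is consistent with the 29 and 2 degrees of freedom reported for their tests in the Results.

```latex
% Base model: diagnosis, day, and survey fixed effects; random intercept per participant
y_{idk} = \beta_0 + \beta_{\mathrm{Dx}}\,\mathrm{Dx}_i + \gamma_d + \delta_k + u_i + \varepsilon_{idk},
\qquad u_i \sim \mathcal{N}(0, \tau^2), \qquad \varepsilon_{idk} \sim \mathcal{N}(0, \sigma^2)

% Adding location (H = 1 if home) and social context (A = 1 if alone) and their interaction
y_{idk} = \beta_0 + \beta_{\mathrm{Dx}}\,\mathrm{Dx}_i + \gamma_d + \delta_k
        + \beta_H H_{idk} + \beta_A A_{idk} + \beta_{HA} H_{idk} A_{idk} + u_i + \varepsilon_{idk}
```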

We used a similar strategy to predict endpoint PANSS scores from the 30 days of sampled EMA items, linking those scores to each of the day × session EMA ratings. We predicted the PANSS hallucinations item from the EMA hearing voices item, and the PANSS delusions item from the receiving messages, having special powers, mind reading, and paranoid ideas items. Similarly, we predicted PANSS suspiciousness from these same four delusion items. We entered diagnosis as a between-groups variable and day and survey as within-subjects repeated measures.

For all MMRM analyses, we performed similar tests: an omnibus comparison of the fitted model to the intercept-only model and significance tests for the factors and their interactions in predicting endpoint reduced emotional experience. Missing data were addressed with maximum likelihood methods. We computed estimated marginal (EM) means that accounted for the who, where, day, and survey repeated-measures variables. Using the same strategy, we performed additional analyses relating the presence and severity of each of the five psychotic symptoms and five mood symptoms, across all individual surveys, to the who, where, day, and survey repeated-measures factors.
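Estimated marginal means average a model's predictions over the levels of the other factors with equal weight per cell, rather than weighting by how many surveys happened to fall in each cell. A minimal sketch for an additive model with no interactions, using invented coefficients; the function name `em_mean` and all numbers are hypothetical, and the random intercept (mean zero) is omitted.

```python
from itertools import product
from statistics import fmean


def em_mean(intercept: float, group_effect: float,
            day_effects: list[float], survey_effects: list[float]) -> float:
    """Average the predicted value over every day x survey cell, weighting cells equally."""
    cells = [intercept + group_effect + d + s
             for d, s in product(day_effects, survey_effects)]
    return fmean(cells)


# Invented coefficients for one diagnostic group: 3 days x 3 surveys for brevity.
days = [0.0, 0.1, -0.1]
surveys = [0.0, 0.3, 0.0]
print(round(em_mean(2.0, 0.5, days, surveys), 6))  # 2.6
```

In a purely additive model, this reduces to intercept + group effect + mean day effect + mean survey effect (2.0 + 0.5 + 0.0 + 0.1 = 2.6 here); with interactions, the cell-by-cell average is needed.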

To generate a more interpretable index of the correlation between EMA psychosis items and endpoint PANSS scores, we aggregated each participant’s scores across all observations for each item. We then used standard regression analyses to calculate a shared variance statistic, first entering the EMA items alone to predict the three critical PANSS items and then adding diagnosis as a second term in each regression to examine total shared variance. The mean square of successive differences (MSSD), the mean of the squared differences between consecutive observations, was calculated as a measure of within-person variability.31 We calculated this index for each EMA psychotic symptom item. For example, if successive responses always differed by 1 point, the MSSD would be 1 (1²=1), and if they always differed by 2 points, it would be 4 (2²=4). Analyses were performed using IBM SPSS Statistics Version 26 (Generalized Linear Models module), except for the MSSD, which was calculated using R version 3.6.0.
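The MSSD definition above can be written in a few lines. This sketch assumes one participant's completed responses to one item are already in time order; how missed surveys were handled is not stated in the text, so skipping them here is an assumption, and the function name is ours.

```python
from statistics import fmean


def mssd(responses: list[float]) -> float:
    """Mean square of successive differences: the average of (x[t+1] - x[t]) ** 2."""
    if len(responses) < 2:
        raise ValueError("MSSD needs at least two responses")
    squared_diffs = [(b - a) ** 2 for a, b in zip(responses, responses[1:])]
    return fmean(squared_diffs)


print(mssd([1, 2, 3, 4]))  # 1.0  (every step is 1 point)
print(mssd([1, 3, 1, 3]))  # 4.0  (every step is 2 points)
print(mssd([1, 2, 4]))     # 2.5  (steps of 1 and 2: (1 + 4) / 2)
```

The last case shows that when step sizes vary, the MSSD is the average of the squared steps rather than the square of the average step.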

Results

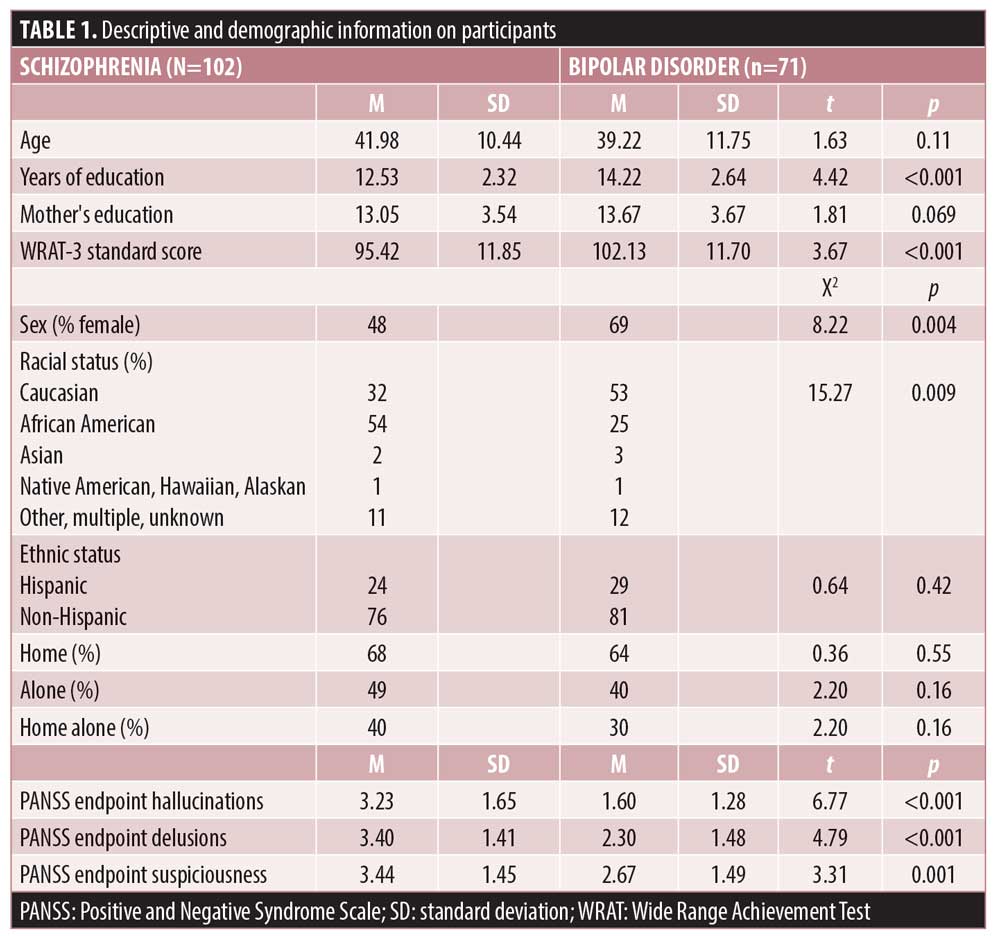

Table 1 presents descriptive information on the participants in the study. There were no differences in age or maternal education across the groups, but participants with SCZ had lower educational attainment and lower WRAT standard scores. There were more male participants in the SCZ sample and more female participants in the BPI sample, and there were some racial (but not ethnic) differences between the samples. PANSS scores were higher for all items in the SCZ sample than in the BPI sample.

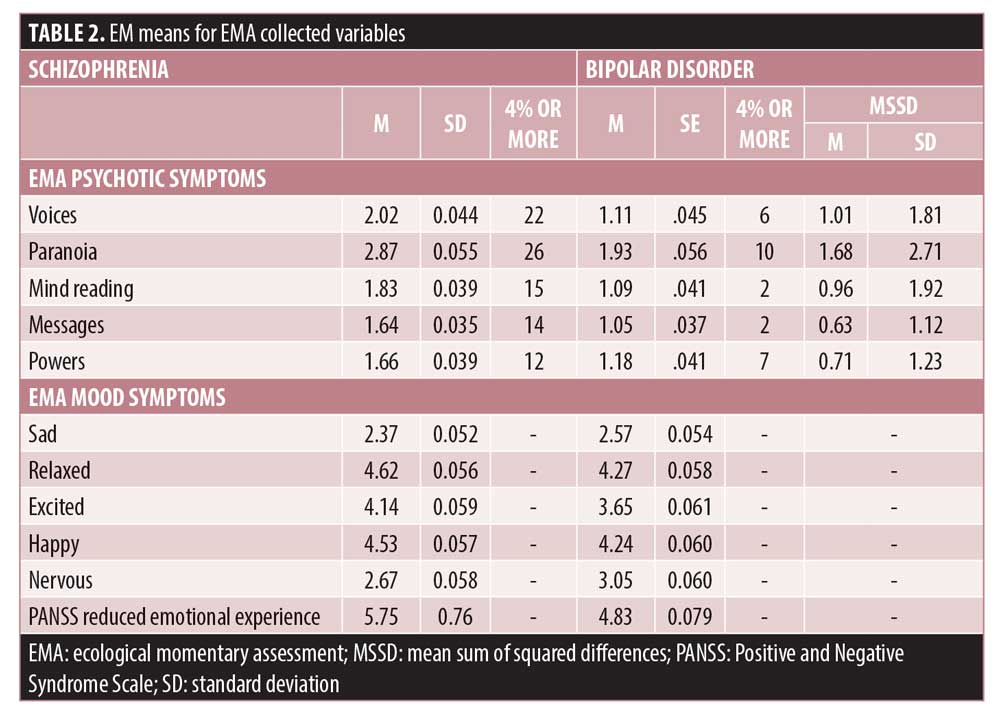

Table 2 presents the EM means for each item across the two diagnostic groups, and Table 3 presents the HLM results predicting the EMA psychosis and mood items. There were 12,406 surveys completed. If every patient who initiated participation in the study had completed every assessment, 15,570 surveys would have been completed. Using that best-case estimate, the observed adherence rate was 80 percent after exclusion of all participants who did not complete the PANSS at endpoint.
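The best-case denominator follows directly from the design: 173 participants (71 with BPI and 102 with SCZ), three prompts per day, and 30 days. A short check of the arithmetic (the variable names are ours):

```python
N_BPI, N_SCZ = 71, 102          # sample sizes reported in the Abstract
PROMPTS_PER_DAY, DAYS = 3, 30   # sampling schedule from the Methods
COMPLETED = 12_406              # surveys completed

possible = (N_BPI + N_SCZ) * PROMPTS_PER_DAY * DAYS
adherence = COMPLETED / possible
print(possible, f"{adherence:.1%}")  # prints: 15570 79.7%
```

The unrounded rate, 79.7 percent, is what the text reports as 80 percent.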

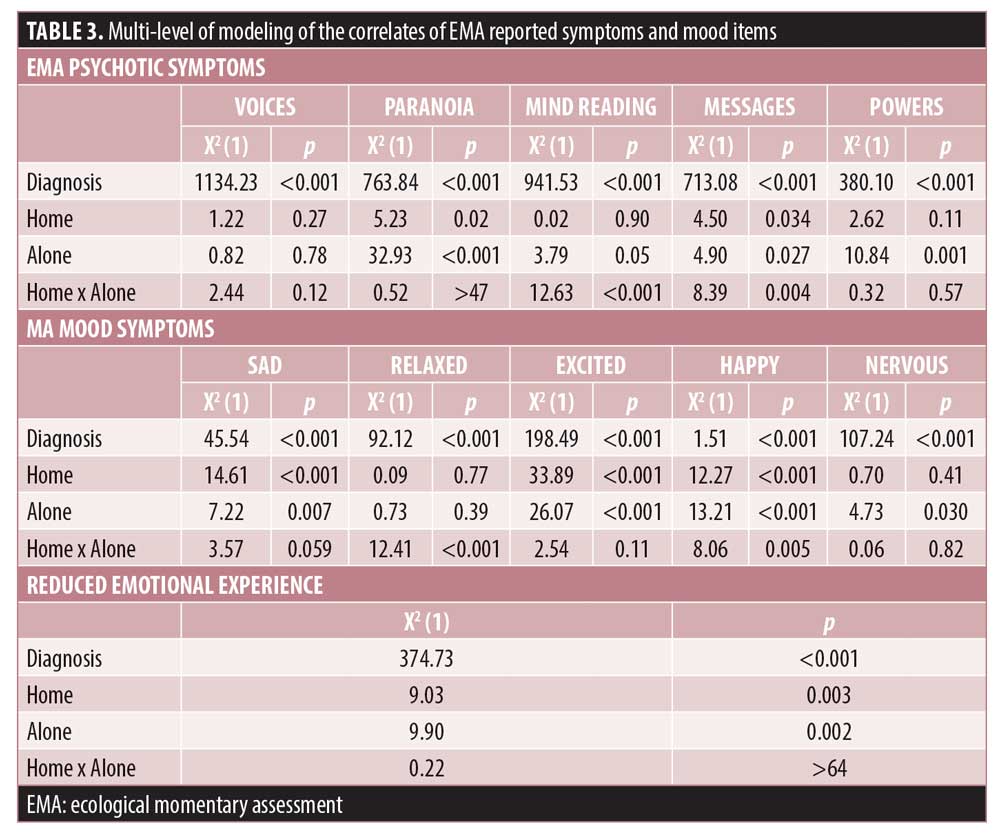

Modeling of symptom and mood scores over the protocol. Every symptom model fit the data better than the intercept-only model: all χ²(32)>90.91, all p<0.001. As can be seen in Table 2, there were substantial diagnostic effects for all psychosis items, with participants with SCZ having higher scores, similar to the pattern seen on the corresponding endpoint PANSS items.

Day and session repeated-measures. Across all of the symptoms, the effects of day (1–30) were not significant, all χ²(29)<40.51, all p>0.10, meaning that there were no significant overall changes in these items over the 30-day assessment period. See Figure 1 for a graphical depiction of three of the items over the 30-day protocol. There were time-of-day effects only for relaxation, χ²(2)=16.54, p<0.001, and anxiety, χ²(2)=7.47, p=0.024. Relaxation was reported to be lower in the mornings, and anxiety was highest at the mid-day assessment.

When we calculated the MSSD for the five psychosis items, the general level of variability was low. When the MSSD was compared across symptoms with a repeated-measures analysis of variance, the overall effect was significant, F(4,171)=14.84, p<0.001. Post-hoc tests revealed that paranoia was more variable than hallucinations and mind reading (p=0.003), which in turn were more variable than receiving messages and having special powers (p=0.002). Thus, the variability of each item tracked the overall severity of the symptom and the number of reports with severity greater than 3.

Location and social context effects. There were no effects of being home or alone on hallucinations, but being alone had a significant effect on all of the delusion items. Being home was associated with greater severity of paranoia and receiving messages, and being home alone was specifically associated with mind reading and receiving messages. In terms of mood symptoms, patients with BPI reported more sadness and nervousness, and patients with SCZ reported more relaxation, excitement, and happiness. Being away from home and with someone was associated with more excitement and happiness, while being alone was associated with more sadness and nervousness. The home × alone interaction for relaxation showed a distinctive pattern: patients who were home alone and those who were away with someone both reported the highest levels of relaxation. Reduced emotional experience was more severe in patients with SCZ, and higher scores were associated with being alone and being home, but there was no home × alone interaction.

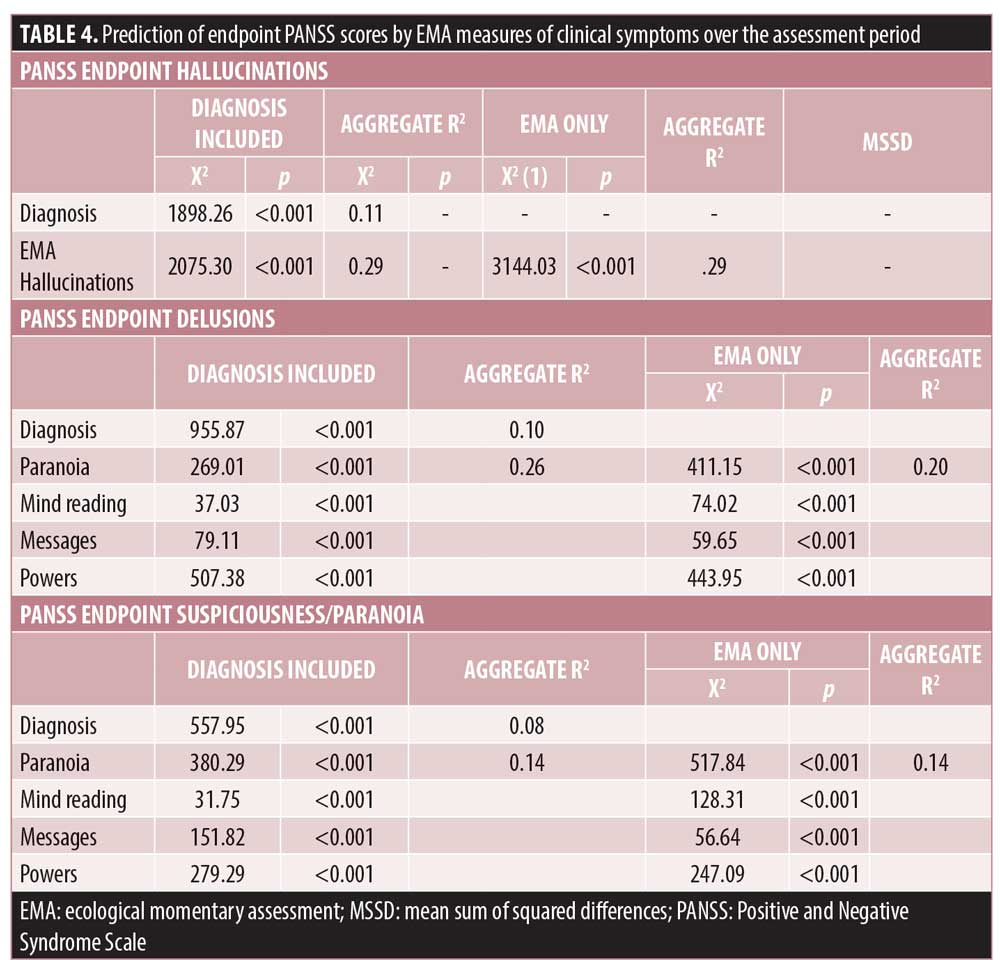

Multi-level modeling predicting PANSS scores. Table 4 presents the results of the MMRM models predicting endpoint PANSS scores, with and without diagnosis as a between-groups factor. Again, there were no significant effects of day or time of day on the association between EMA-reported symptoms and endpoint PANSS scores, all χ²(29)<33.44, all p>0.80. In analyses both with and without diagnosis included, the EMA variables were significantly related to endpoint PANSS scores. All four EMA psychosis items involving delusional thinking were significantly related to the PANSS endpoint items delusions and suspiciousness/unusual thought content.

As can be seen in Table 4, we also calculated variance-accounted-for statistics using regression analyses relating the aggregate of all EMA observations over the protocol to the PANSS items. EMA-reported hallucinations shared the most variance with PANSS scores, and the EMA items predicting delusions and suspiciousness also shared considerable variance with PANSS ratings, despite the relatively low average PANSS scores for participants with BPI.
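For the single-predictor case (for example, aggregated EMA hearing voices predicting the PANSS hallucinations item), the shared variance is the squared Pearson correlation between per-participant means and endpoint ratings. A minimal sketch with invented data; the function names are hypothetical, and the multi-item delusion models and the added diagnosis term would require multiple regression, which this sketch does not cover.

```python
from statistics import fmean


def person_means(records: list[tuple[str, float]]) -> dict[str, float]:
    """Average all of each participant's EMA ratings for one item."""
    by_person: dict[str, list[float]] = {}
    for pid, score in records:
        by_person.setdefault(pid, []).append(score)
    return {pid: fmean(scores) for pid, scores in by_person.items()}


def r_squared(x: list[float], y: list[float]) -> float:
    """Shared variance for one predictor: the squared Pearson correlation of x and y."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)


# Invented EMA records (participant, 1-7 rating) and endpoint PANSS ratings.
records = [("p1", 1.0), ("p1", 3.0), ("p2", 4.0), ("p2", 6.0), ("p3", 2.0), ("p3", 2.0)]
panss = {"p1": 2.0, "p2": 5.0, "p3": 3.0}
means = person_means(records)
pids = sorted(means)
print(round(r_squared([means[p] for p in pids], [panss[p] for p in pids]), 3))  # 0.893
```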

Discussion

In this study, EMA assessments of psychotic symptoms showed considerable convergence with in-person clinical ratings based on the PANSS. These results support the validity of EMA symptom assessments in both SCZ and BPI, with the expected diagnostic effects. We also found that variation in both mood states and psychotic symptoms was related to where the patients were and who they were with. These data also suggest that repeated sampling of symptoms using EMA, up to 90 times in 30 days, does not lead to any substantial alterations in reports of symptom severity (as evidenced by the absence of significant effects of day over up to 90 observations). In contrast to the placebo effects arising from repeated in-person assessments of negative symptoms in SCZ or of PTSD symptoms, there was nothing that could be construed as a placebo, exposure, or treatment effect in this large sample of EMA reports.32–35 In fact, day-to-day variation in mean EMA symptom scores was less than 0.5 points, and the endpoint scores for all symptoms, in both groups, were essentially identical to Day 1 scores. Average day-to-day variation was 1 point or less for four of the five items. For the item with the most trial-to-trial variation, suspiciousness, more participants in both samples had scores in the clinically significant range at one or more observations, and these participants could have fluctuated in their severity reports.

Consistent with our previous study,7 which had an SCZ-only sample and half the number of participants, being home and/or alone consistently across surveys was substantially associated with higher clinical ratings of reduced emotional experience. Certain psychotic symptoms (e.g., paranoid thoughts and feelings of being able to read others’ minds) were also more likely when participants were home, alone, or both. These data highlight the potential detrimental effects of social isolation and point to a potential therapeutic role for social and community engagement. Thus, EMA assessment data could be used to trigger either additional assessments or therapeutic interventions.

The data presented here also strongly argue against the idea that people with severe mental illness (SMI) would be reluctant to report psychotic symptoms in a daily assessment paradigm. In fact, there were a large number of surveys (over 2,200) in which participants reported clinically significant hallucinations or paranoid ideation. Despite diagnostic differences in symptom severity, associations between EMA-reported symptoms and PANSS scores were considerable, and the effects of being home and/or alone held up even after accounting for diagnosis. These data support the feasibility of EMA sampling to assess both positive and negative psychotic symptoms, with 80 percent of all possible surveys answered. Furthermore, the convergence between participants’ EMA reports and clinical ratings suggests that even if the symptom severity reported via EMA was lower than that rated on the PANSS, the rank order of severity was strongly similar. One would expect highly trained, reliability-checked PANSS raters to generate a wider range of scores than patients with SMI who are simply asked to report on their experiences without extensive training in severity anchor points. The reduced emotional experience scores also overlapped substantially with patients’ where and who reports elicited with EMA.

Limitations of the study include that participants were not selected on the basis of substantial psychotic or negative symptoms. Overall symptom severity on the psychosis items from the PANSS was moderate or less, as was severity on the reduced emotional experience items. Patients with BPI had considerably lower severity scores on all psychosis items. There are alternative strategies to assess negative symptoms more comprehensively, including specialized rating scales. Adherence was not perfect, but general levels of adherence were excellent for an SMI population,36–38 and EMA-reported clinical symptom data were missing for only 0.03 percent of surveys in which a where and who response was made.

These data suggest that EMA could be a valuable adjunct in treatment studies, including those of psychosocial and pharmacological approaches. Previous studies of participants with SCZ have used EMA sampling to quantify the time course of emotional and hedonic responses, highlighting the usefulness of highly time-linked data that can be tied to ongoing experiences and sampled repeatedly over time.39 The flexibility of this technology can be harnessed to allow targeted queries based on geolocation and to deliver interventions accordingly. Future reports from these studies will provide information on the activities participants engaged in and the impact of geolocation on cognitive test performance and self-assessment of cognitive capabilities. The data also suggest that sampling during one week of a month-long follow-up period provides correlations with endpoint PANSS scores essentially identical to those from daily sampling. Thus, longer studies with sampling distributed across the study period would seem to be feasible, with sampling occurring only during selected study weeks.

References

- Firth J, Torous J, Yung AR. Ecological momentary assessment and beyond: the rising interest in e-mental health research. J Psychiatr Res. 2016;80:3–4.

- Mote J, Fulford D. Ecological momentary assessment of everyday social experiences of people with schizophrenia: a systematic review. Schizophr Res. 2019;S0920-9964(19)30428-1.

- Depp CA, Bashem J, Moore RC, et al. GPS mobility as a digital biomarker of negative symptoms in schizophrenia: a case control study. NPJ Digit Med. 2019;2:108.

- Stone AA, Shiffman S, Schwartz JE, et al. Patient compliance with paper and electronic diaries. Control Clin Trials. 2003;24(2):182–199.

- Kimhy D, Delespaul P, Corcoran C, et al. Computerized experience sampling method (ESMc): assessing feasibility and validity among individuals with schizophrenia. J Psychiatr Res. 2006;40(3):221–230.

- Brusilovskiy E, Klein LA, Salzer MS. Using global positioning systems to study health-related mobility and participation. Soc Sci Med. 2016;161:134–142.

- Granholm E, Holden JL, Mikhael T, et al. What do people with schizophrenia do all day? Ecological momentary assessment of real-world functioning in schizophrenia. Schizophr Bull. 2020;46(2):242–251.

- Myin-Germeys I, Oorschot M, Collip D, et al. Experience sampling research in psychopathology: opening the black box of daily life. Psychol Med. 2009;39(9):1533–1547.

- Oorschot M, Lataster T, Thewissen V, et al. Symptomatic remission in psychosis and real-life functioning. Br J Psychiatry. 2012;201(3):215–220.

- Ben-Zeev D, Brenner CJ, Begale M, et al. Feasibility, acceptability, and preliminary efficacy of a smartphone intervention for schizophrenia. Schizophr Bull. 2014;40(6):1244–1253.

- Bell IH, Fielding-Smith SF, Hayward M, et al. Smartphone-based ecological momentary assessment and intervention in a coping-focused intervention for hearing voices (SAVVy): study protocol for a pilot randomised controlled trial. Trials. 2018;19(1):262.

- Verhagen SJ, Hasmi L, Drukker M, et al. Use of the experience sampling method in the context of clinical trials. Evid Based Ment Health. 2016;19(3):86–89.

- Oorschot M, Lataster T, Thewissen V, et al. Mobile assessment in schizophrenia: a data-driven momentary approach. Schizophr Bull. 2012;38(3):405–413.

- Ben-Zeev D, Morris S, Swendsen J, et al. Predicting the occurrence, conviction, distress, and disruption of different delusional experiences in the daily life of people with schizophrenia. Schizophr Bull. 2011;38(4):826–837.

- Ben-Zeev D, McHugo GJ, Xie H, et al. Comparing retrospective reports to real-time/real-place mobile assessments in individuals with schizophrenia and a nonclinical comparison group. Schizophr Bull. 2012;38(3):396–404.

- Granholm E, Loh C, Swendsen J. Feasibility and validity of computerized ecological momentary assessment in schizophrenia. Schizophr Bull. 2008;34(3):507–514.

- Depp CA, Thompson WK, Frank E, et al. Prediction of near-term increases in suicidal ideation in recently depressed patients with bipolar II disorder using intensive longitudinal data. J Affect Disord. 2017;208:363–368.

- Depp CA, Kim DH, de Dios LV, et al. A pilot study of mood ratings captured by mobile phone versus paper-and-pencil mood charts in bipolar disorder. J Dual Diagn. 2012;8(4):326–332.

- Thompson WK, Gershon A, O’Hara R, et al. The prediction of study-emergent suicidal ideation in bipolar disorder: a pilot study using ecological momentary assessment data. Bipolar Disord. 2014;16(7):669–677.

- Depp CA, Moore RC, Perivoliotis D, et al. Social behavior, interaction appraisals, and suicidal ideation in schizophrenia: the dangers of being alone. Schizophr Res. 2016;172(1–3):195–200.

- Moore RC, Depp CA, Wetherell JL, et al. Ecological momentary assessment versus standard assessment instruments for measuring mindfulness, depressed mood, and anxiety among older adults. J Psychiatr Res. 2016;75:116–123.

- Santangelo PS, Ebner-Priemer UW, Trull TJ, et al. Experience sampling methods in clinical psychology. In: Comer JS, Kendall PC, eds. The Oxford Handbook of Research Strategies for Clinical Psychology. Oxford, United Kingdom: Oxford University Press; 2013:88–210.

- Ebner-Priemer UW, Trull TJ. Ecological momentary assessment of mood disorders and mood dysregulation. Psychol Assess. 2009;21(4):463–475.

- Mofsen AM, Rodebaugh TL, Nicol GE, et al. When all else fails, listen to the patient: a viewpoint on the use of ecological momentary assessment in clinical trials. JMIR Ment Health. 2019;6(5):e11845.

- Delespaul P, de Vries M, van Os J. Determinants of occurrence and recovery from hallucinations in daily life. Soc Psychiatry Psychiatr Epidemiol. 2002;37(3):97–104.

- Jastak S. Wide-Range Achievement Test. 3rd ed. San Antonio, TX: Wide Range, Inc; 1993.

- Kay SR, Fiszbein A, Opler LA. The positive and negative syndrome scale (PANSS) for schizophrenia. Schizophr Bull. 1987;13(2):261–276.

- Khan A, Liharska L, Harvey PD, et al. Negative symptom dimensions of the positive and negative syndrome scale across geographical regions: implications for social, linguistic, and cultural consistency. Innov Clin Neurosci. 2017;14:30–40.

- Harvey PD, Khan A, Keefe RSE. Using the positive and negative syndrome scale (PANSS) to define different domains of negative symptoms: prediction of everyday functioning by impairments in emotional expression and emotional experience. Innov Clin Neurosci. 2017;14:18–22.

- Strassnig MT, Bowie CR, Pinkham AE, et al. Which levels of cognitive impairments and negative symptoms are related to functional deficits in schizophrenia? J Psychiatr Res. 2018;104:124–129.

- Jahng S, Wood PK, Trull TJ. Analysis of affective instability in ecological momentary assessment: indices using successive difference and group comparison via multilevel modeling. Psychol Methods. 2008;13(4):354–375.

- Correll CU, Davis RE, Weingart M, et al. Efficacy and safety of lumateperone for treatment of schizophrenia: a randomized clinical trial. JAMA Psychiatry. 2020;77(4):349–358.

- Bugarski-Kirola D, Iwata N, Sameljak S, et al. Efficacy and safety of adjunctive bitopertin versus placebo in patients with suboptimally controlled symptoms of schizophrenia treated with antipsychotics: results from three phase 3, randomised, double-blind, parallel-group, placebo-controlled, multicentre studies in the SearchLyte clinical trial programme. Lancet Psychiatry. 2016;3(12):1115–1128.

- Hodgins GE, Blommel JG, Dunlop BW, et al. Placebo effects across self-report, clinician rating, and objective performance tasks among women with post-traumatic stress disorder: investigation of placebo response in a pharmacological treatment study of post-traumatic stress disorder. J Clin Psychopharmacol. 2018;38(3):200–206.

- Dunlop BW, Binder EB, Iosifescu D, et al. Corticotropin-releasing factor receptor 1 antagonism is ineffective for women with posttraumatic stress disorder. Biol Psychiatry. 2017;82(12):866–874.

- Kirkpatrick B, Strauss GP, Nguyen L, et al. The brief negative symptom scale: psychometric properties. Schizophr Bull. 2011;37(2):300–305.

- Raskin A, Pelchat R, Sood R, et al. Negative symptom assessment of chronic schizophrenia patients. Schizophr Bull. 1993;19(3):627–635.

- Horan WP, Kring AM, Gur RE, et al. Development and psychometric validation of the clinical assessment interview for negative symptoms (CAINS). Schizophr Res. 2011;132(2–3):140–145.

- Strauss GP, Esfahlani FZ, Granholm E, et al. Mathematically modeling anhedonia in schizophrenia: a stochastic dynamical systems approach. Schizophr Bull. 2020;sbaa014. Online ahead of print.