by Thomas M. Shiovitz, MD; Charles S. Wilcox, PhD, MPA, MBA; Lilit Gevorgyan, BS; and Adnan Shawkat, BA

Dr. Shiovitz is from CTSdatabase, LLC, in Beverly Hills, California, and California Neuroscience Research Medical Group, Inc., Sherman Oaks, California; Dr. Wilcox is from Pharmacology Research Institute, Los Alamitos, California; Ms. Gevorgyan is from California Neuroscience Research Medical Group, Inc., Sherman Oaks, California; and Mr. Shawkat is from CTSdatabase, LLC, in Beverly Hills, California.

Innov Clin Neurosci. 2013;10(2):17–21

Funding: There was no funding for the development and writing of this article.

Financial Disclosures: Dr. Shiovitz is president of CTSdatabase, LLC, the database used in this study. Dr. Wilcox, Ms. Gevorgyan, and Mr. Shawkat have no conflicts of interest relevant to the content of this article.

Keywords: Duplicate subjects, professional subjects, professional patients, site cooperation, investigator collaboration, subject database, subject registry

Abstract: Objective: To report the results of the first 1,132 subjects in a pilot project where local central nervous system trial sites collaborated in the use of a subject database to identify potential duplicate subjects. Method: Central nervous system sites in Los Angeles and Orange County, California, were contacted by the lead author to seek participation in the project. CTSdatabase, a central nervous system-focused trial subject registry, was utilized to track potential subjects at pre-screen. Subjects signed an institutional review board-approved authorization prior to participation, and site staff entered their identifiers by accessing a website. Sites were prompted to communicate with each other or with the database administrator when a match occurred between a newly entered subject and a subject already in the database.

Results: Between October 30, 2011, and August 31, 2012, 1,132 subjects were entered at nine central nervous system sites. Subjects continue to be entered, and more sites are expected to begin participating by the time of publication. Initially, a few sites raised concerns over patient acceptance, financial implications, and/or legal and privacy issues, but these were eventually overcome. Patient acceptance was estimated to be above 95 percent. Duplicate subjects (those who matched several key identifiers with subjects at different sites) made up 7.78 percent of the sample, and certain duplicates (matching identifiers with less than a 1 in 10 million likelihood of occurring by chance in the general population) accounted for 3.45 percent of prescreens entered into the database. Many of these certain duplicates were not consented for studies because of the information provided by the registry. Conclusion: The use of a clinical trial subject registry and cooperation between central nervous system trial sites can reduce the number of duplicate and professional subjects entering clinical trials. To be fully effective, a trial subject database could be integrated into protocols across pharmaceutical companies, thereby mandating site participation and increasing the likelihood that duplicate subjects will be removed before they enter (and negatively affect) clinical trials.

Introduction

Duplicate and professional subjects are a significant problem in clinical trials and likely contribute to failed central nervous system (CNS) studies.[1] Evident wherever there is a high density of study sites, duplicate subjects have been reported in California, Florida, Pennsylvania, New York, and several other states.[2,3]

Pharmaceutical companies that track subjects within the development program of a single compound have reported duplicates in the range of 1.5 to 5 percent.[4] While there have been reports of Phase I sites in Florida tracking potential subjects across a few local sites, until now there was no mechanism to track CNS subjects across sponsors or between sites.[5]

Frustrated by growing numbers of duplicate and professional subjects, several Los Angeles-area CNS sites agreed to register prescreened subjects in a database designed to track potential CNS study subjects. These competitor sites were also willing to communicate rapidly with one another to determine whether matched subjects had actually been entered into studies at other sites.

Methods

We adapted CTSdatabase, a trial subject registry originally designed for use in CNS protocols to provide information on newly screened subjects, to track prescreened patients in Southern California.

Site investigators were initially contacted by the lead author and asked to participate in the database. After signing a confidentiality agreement, investigators were provided with a template Subject Database Authorization Form (“Authorization”), which needed to be approved by a local institutional review board (IRB). Subsequent to IRB approval, staff from CTSdatabase would provide appropriate site staff with training on the web-based system, usually in the form of a webinar, and secure login information.

Potential subjects (“subjects”) were required to provide picture identification and sign the Authorization before their data were entered into the database. Site staff would then log in to the website. The database captured the date and the zip code of the entering site, as well as the partial identifiers authorized by subjects and entered by site staff.

The proprietary database algorithm compared each newly entered subject with existing entries and estimated the likelihood that the new entry was a true match to an existing subject. Matches short of certain were also included in the report to the site, to help detect patterns among subjects who might try to “game” the system by providing incorrect identifiers. The site received an immediate printout detailing any possible, probable, or virtually certain (“certain”) match. A possible match has approximately a 1 in 100,000 likelihood of occurring by chance, a probable match 1 in 1 million, and a certain match 1 in 10 million.
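The matching algorithm itself is proprietary and is not described here. The following minimal sketch illustrates only the reporting step, under stated assumptions: it takes an estimated chance-match probability and maps it to the three report labels using the thresholds given above. The helper `chance_match_probability`, which multiplies per-identifier agreement probabilities, is a hypothetical illustration that assumes the identifiers are statistically independent; it is not the CTSdatabase method.

```python
from math import prod

# Thresholds stated in the text: approximate probability that a match of
# this strength would occur by chance in the general population.
POSSIBLE = 1 / 100_000
PROBABLE = 1 / 1_000_000
CERTAIN = 1 / 10_000_000


def chance_match_probability(field_probs):
    """Hypothetical: combine per-identifier chance-agreement probabilities,
    assuming the identifiers are statistically independent."""
    return prod(field_probs)


def classify_match(p_chance):
    """Map an estimated chance-match probability to a report label."""
    if p_chance <= CERTAIN:
        return "certain"
    if p_chance <= PROBABLE:
        return "probable"
    if p_chance <= POSSIBLE:
        return "possible"
    return "no match"
```

For example, with hypothetical per-identifier probabilities of 1 in 10,000 for the last four SSN digits, 1 in 365 for the birthday (month and day), and 1 in 2 for gender, the combined probability is about 1.4 in 10 million, which falls in the probable band. Real identifiers are not independent, which is one reason a production algorithm would need to be more careful than this.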

The next step required further collaboration between investigators. If the subject was a virtually certain match, the other site had to be contacted to determine whether the prescreened subject had actually been entered into a study and, if so, when the subject completed it. Study-specific or sponsor-specific information was not shared between sites.

If the subject was a possible or probable (but not certain) match, CTSdatabase staff were contacted to determine whether this was a true match or a “false positive.” For example, if the subject differed only in the last four digits of the Social Security Number (SSN) but had the same initials, date of birth (DOB), gender, height, and weight, it was likely a true match. Conversely, a match on SSN, gender, and initials with a year of birth or weight differing by a large margin made a true match unlikely. The decision to actually consent a subject for a study was always left to the site investigator.
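The two examples above can be encoded as a simple rule of thumb. This sketch is hypothetical throughout: the record layout, the field names, and the tolerances (`year_tol`, `weight_tol_lb`, `height_tol_in`) are assumptions chosen for illustration, not the criteria CTSdatabase staff actually applied, and the final decision still rested with the site investigator.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PartialIdentifiers:
    # Hypothetical record layout built from the identifiers named in the text.
    initials: str
    dob: date
    gender: str
    ssn_last4: str
    height_in: int
    weight_lb: int


def likely_true_match(a, b, year_tol=1, weight_tol_lb=20, height_tol_in=2):
    """Hypothetical rule of thumb for adjudicating a possible/probable match."""
    # A large gap in birth year or weight argues against a true match,
    # even when SSN digits, gender, and initials agree.
    if abs(a.dob.year - b.dob.year) > year_tol:
        return False
    if abs(a.weight_lb - b.weight_lb) > weight_tol_lb:
        return False
    agreements = [
        a.initials == b.initials,
        a.dob == b.dob,
        a.gender == b.gender,
        a.ssn_last4 == b.ssn_last4,
        abs(a.height_in - b.height_in) <= height_tol_in,
    ]
    # Agreement on all but one identifier (e.g., differing SSN digits)
    # still suggests the same person.
    return sum(agreements) >= len(agreements) - 1
```

Checking the large discrepancies first mirrors the text: a wide gap in birth year or weight rules out a match before any count of agreeing fields is considered.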

The first subjects were entered at California Neuroscience Research on October 30, 2011, and additional sites were added over the next nine months. As of August 31, 2012, 1,132 subjects had been entered at nine sites in Los Angeles and Orange County as part of this pilot project. Several additional sites and many more subjects are expected to participate by the time of publication.

Results were obtained by querying the database for the number of subjects entered, the number and type of matches, and the number of sites actively using the database in each month. In addition, anecdotal information was collected on patient and site attitudes toward participating in the database project.
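Figure 1 reports cumulative subjects and participating sites by month. A minimal sketch of such a monthly tally follows, assuming each database entry can be reduced to an (entry date, site identifier) pair and that a site counts as active in a month if it entered at least one subject that month. The function `monthly_summary`, the data layout, and the example entries are hypothetical.

```python
from collections import defaultdict
from datetime import date


def monthly_summary(entries):
    """Tally entries by calendar month.

    entries: iterable of (entry_date, site_id) pairs (hypothetical layout).
    Returns {(year, month): (new_entries, cumulative_entries, active_sites)};
    months with no entries are omitted.
    """
    new_by_month = defaultdict(int)
    sites_by_month = defaultdict(set)
    for entry_date, site_id in entries:
        key = (entry_date.year, entry_date.month)
        new_by_month[key] += 1
        sites_by_month[key].add(site_id)

    summary, running_total = {}, 0
    for key in sorted(new_by_month):
        running_total += new_by_month[key]
        summary[key] = (new_by_month[key], running_total, len(sites_by_month[key]))
    return summary


# Illustrative entries only, not study data.
entries = [
    (date(2011, 10, 30), "site_A"),
    (date(2011, 11, 2), "site_A"),
    (date(2011, 11, 5), "site_B"),
]
print(monthly_summary(entries))
```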

Results

A total of 1,132 potential subjects were entered into the database. The overwhelming majority were prescreens for depression (66.3%) and schizophrenia (24.9%). Bipolar prescreens made up 4.2 percent of the sample, while anxiety, obsessive-compulsive disorder (OCD), migraine, and “other” prescreens accounted for smaller numbers.

A number of certain matches (n=29) were likely due to the re-entry of the same subject at the same site, days or months apart, when a subject did not initially qualify for a study and was brought back at a later date. This number was offset by the number of subjects who were immediately deterred by reading or knowing about the Authorization (i.e., they either showed up for a prescreen and were never entered, or they were told over the phone that they would be entered into a database and never showed up). Regardless, for the purposes of this paper, we have removed from the data set matching subjects who were re-entered at the same site and report only duplicate subjects: those who matched identifiers with subjects at another site.
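The exclusion rule above (drop matches between entries from the same site and keep only matches across sites) can be sketched as a filter over match records. The `Match` record, its fields, and the example data are hypothetical and serve only to illustrate the rule.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Match:
    # Hypothetical record of one hit between a new entry and an existing one.
    new_site: str
    existing_site: str
    category: str  # "possible", "probable", or "certain"


def cross_site_counts(matches):
    """Exclude same-site re-entries and count duplicates by match category."""
    return Counter(m.category for m in matches if m.new_site != m.existing_site)


# Illustrative records only, not study data.
matches = [
    Match("site_A", "site_A", "certain"),  # same-site re-entry: excluded
    Match("site_A", "site_B", "certain"),
    Match("site_B", "site_C", "possible"),
]
print(cross_site_counts(matches))
```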

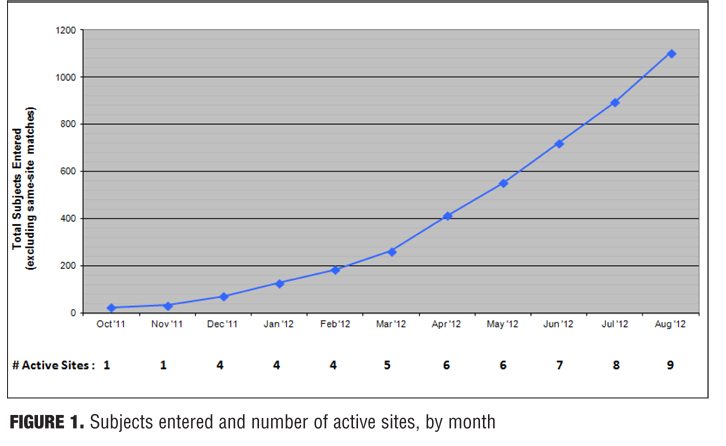

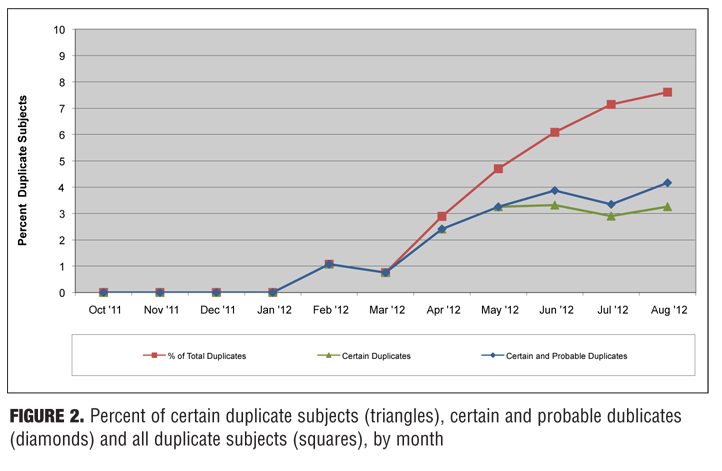

Of the 1,132 subjects entered, after excluding the 29 who were re-entered at the same site, a total of 86 duplicates were found: 38 certain duplicates, 10 probable duplicates, and 38 possible duplicates. When sites were contacted and identifiers were checked, almost all of the possible matches and some of the probable matches either were clearly not the same subject or lacked enough information to be considered true duplicates. In contrast, all but one of the virtually certain matches (which was ambiguous) clearly appeared to be the same subject. Figure 1 shows the cumulative number of subjects entered and the number of participating sites, by month. Figure 2 shows the percentage of certain duplicates, certain plus probable duplicates, and total duplicates (i.e., certain, probable, and possible duplicates) by month.

The overall proportion of certain duplicates (i.e., true duplicates, excluding all possible and probable matches) was 3.45 percent of entered subjects. When probable matches were included, this increased to 4.35 percent.
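These percentages can be reproduced if the denominator is the 1,103 entries that remain after the 29 same-site re-entries are removed from the 1,132 entered. That denominator is inferred here from the reported figures rather than stated in the text.

```python
# Counts reported in the Results section.
ENTERED = 1_132
SAME_SITE_REENTRIES = 29
CERTAIN, PROBABLE = 38, 10

# Assumed denominator: entries remaining after same-site re-entries are removed.
denominator = ENTERED - SAME_SITE_REENTRIES  # 1,103


def pct(count, total):
    """Percentage rounded to two decimal places."""
    return round(100 * count / total, 2)


print(pct(CERTAIN, denominator))             # 3.45
print(pct(CERTAIN + PROBABLE, denominator))  # 4.35
```

Dividing by the full 1,132 instead would give 3.36 percent for certain duplicates, which does not match the reported value.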

The overwhelming majority of subjects (e.g., 190/193 at California Neuroscience Research [CNR] and 395/405 at Pharmacology Research Institute [PRI]) had no issue with providing identifiers and signing the Authorization. Nonetheless, at CNR, for example, one potential subject left to take a phone call and never returned, one ripped up the Authorization, and another took all the prescreen paperwork with him and abruptly left the office. At PRI, four persons did not want to disclose the last four digits of their SSN, and six others abruptly left the office while reviewing the prescreen paperwork. We have also observed other potential subjects who, when presented with the prospect of being entered into the database, “came clean” about prior study participation that they had previously denied.
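The two sites' counts are consistent with the Abstract's estimate of patient acceptance above 95 percent. The sketch below simply pools them; the text does not say exactly how the overall estimate was derived, so pooling these two sites is an assumption.

```python
# (accepted, presented with the Authorization) counts reported for each site.
site_counts = {"CNR": (190, 193), "PRI": (395, 405)}

for site, (accepted, presented) in site_counts.items():
    print(f"{site}: {100 * accepted / presented:.1f}% accepted")

pooled_accepted = sum(a for a, _ in site_counts.values())    # 585
pooled_presented = sum(n for _, n in site_counts.values())   # 598
print(f"Pooled: {100 * pooled_accepted / pooled_presented:.1f}% accepted")
```

This prints 98.4 percent for CNR, 97.5 percent for PRI, and 97.8 percent pooled.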

Potential sites varied in their enthusiasm for database participation and collaboration; most were eager to cooperate. Some sites initially cited legal/privacy issues, while others cited concerns over patient acceptance or financial implications (i.e., not being paid for their prescreen work if subjects were excluded before signing a study-specific informed consent) as the source of their hesitancy. Most of these concerns were eventually resolved with explanations of the database's Health Insurance Portability and Accountability Act (HIPAA) protections and of the potential long-term negative financial effects of taking no action to ensure subject quality. In one instance, a compromise allowed one site to use the database without obtaining the last four digits of the SSN.

Discussion

Investigators are usually independent practitioners or part of independent research facilities and are not accustomed to collaborating with other sites except (somewhat symbolically) at investigator meetings. In fact, extensive PubMed and Google Scholar searches combining investigator/site with cooperation/collaboration yielded no results (searched August 1, 2012).

Local investigators are competitors who often must share studies and potential patient populations with one another. As with competing pharmaceutical companies, information regarding patient populations, enrollments, and study participation is not shared because of privacy and financial concerns.[6]

In tandem with the expanded use of contract research organizations (CROs), multiple vendors, and centralized raters, investigators have become more marginalized and have less direct input into the design and execution of studies.[7] At the same time, CNS investigators have watched as placebo-response rates have increased, treatment effects have decreased, and studies have become increasingly complex.[8–11]

Duplicate and so-called professional subjects are likely among the contributors to failed CNS studies.[1] The rise in professional subjects may be due, in part, to the poor economy, the rise of the internet, and a culture of dishonesty.[12,13] The increasing duration and complexity of study visits likely contribute to this heightened dishonesty, as study participants tend to cheat more when the task is “taxing and depleting.”[14] In addition, increasingly complicated entrance criteria may give these professionals a competitive edge over bona fide (and comparatively naïve) CNS patients.[7,15]

This pilot project was bolstered by a strong desire among Los Angeles-area CNS investigators to work actively toward reversing the increasing trend of failed studies (while also mitigating continuing investigator marginalization). Furthermore, use of a clinical trial subject registry focuses attention on the nature of the subjects in clinical trials, an issue that other methods of reducing the incidence of failed studies, such as rater training or centralized raters, do not address.

Poor-quality subjects (those participating in multiple studies, answering questions dishonestly, and/or not taking the study medication) remain a major liability for any study, even when they are rated perfectly.

This pilot project had many limitations. Not all area sites participated, and those that did started slowly. Only a few sites participated in the early months, and several others have only recently started entering subjects. Furthermore, the database is prospective, so subjects seen before a site joined the pilot project did not sign an Authorization and therefore could not be included in the database.

In addition, professional patients, who can access websites to learn how to participate in more than one study at a time,[16] can easily shift to nonparticipating sites or other geographic locations and, for now, avoid the registry.

This pilot project was conducted exclusively within the Los Angeles/Orange County area, one of the largest population centers (and therefore an area with a high density of study sites and a potentially high percentage of duplicates).

Admittedly, only some of the CNS sites in Southern California were included; had additional CNS sites been included, the number of matches found would certainly have been higher. Moreover, had non-CNS sites been included, the number would be higher still. Our program would not detect, for example, a subject simultaneously participating in an allergy, fibromyalgia, or pain study while prescreening for a depression study at one of our participating sites.

In addition, sites themselves could choose not to participate in this initiative. In fact, some nonparticipating sites may have seen an initial increase in enrollment from subjects who were matched at (or who avoided) sites that used the registry.

Also, because data were entered at prescreen (i.e., rather than after informed consent and at the last subject contact, as the database was designed to be used), sites had to contact each other or the database administrator to determine whether a subject had actually entered and completed a study. While this fostered inter-site cooperation, it caused a short delay before sites received the information they needed to decide whether to screen the subject, and this process became more complex as the number of participating sites increased.

Conclusion

Site collaboration and use of a trial subject registry certainly reduced the number of duplicate subjects entering studies at participating sites; however, the effect on the overall number of duplicate subjects entering CNS studies is unknown. Only a modest number of CNS sites, all in one region of the country, participated. Subjects remained free to “shift” to nonparticipating sites or to enter protocols in other regions or in non-CNS indications.

In this pilot study, 3.45 percent of prescreens were identified as certain duplicates (and presumably excluded from double-blind study participation). When probable duplicates were included, this number increased to 4.35 percent. While this percentage cannot be extrapolated to quantify the overall effect of duplicate and professional subjects on failed CNS studies, it is clear that an effect exists and that it is substantial.

To be truly effective, a clinical trial subject database should be integrated into CNS protocols, thereby mandating participation from all sites. Furthermore, a subject registry should be nationwide and must have participation across all pharmaceutical companies in order to more effectively prevent professional subjects from enrolling in studies from nonparticipating sponsors (and contaminating their results).

References

1. Alphs L, Benedetti F, Fleischhacker WW, Kane JM. Placebo-related effects in clinical trials in schizophrenia: what is driving this phenomenon and what can be done to minimize it? Int J Neuropsychopharmacol. 2012;15:1003–1014.

2. Hanson E, Hudgins B, Tyler D, et al. A method to identify subjects who attempt to enroll in more than 1 trial within a drug development program and a preliminary review of subject characteristics. Presented at CNS Summit. Nov 2012, Boca Raton, FL.

3. Council for Clinical Research Subject Safety and Data Integrity. Pervasive fraud in the clinical trial world. Video, 2010. http://www.youtube.com/watch?v=m_k-ktAgBBU. Accessed 27 August 2012.

4. Shiovitz TM, Zarrow ME, Shiovitz AM, Bystritsky AM. Failure rate and “professional subjects” in clinical trials of major depressive disorder. Letter to the editor. J Clin Psychiatry. 2011;72(9):1284.

5. Resnik DB, Koski G. A national registry for healthy volunteers in phase 1 clinical trials. JAMA. 2011;305(12).

6. Khin NA, Chen YF, Yang Y, et al. Failure rate and “professional subjects” in clinical trials of major depressive disorder. Letter in reply. J Clin Psychiatry. 2011;72(9):1284.

7. Shiovitz TM, Zarrow ME, Sambunaris A, Gevorgyan L. Subjects in CNS clinical trials poorly reflect patient population. Poster presentation. CNS Summit. Nov 2012, Boca Raton, FL.

8. Khin NA, Chen YF, Yang Y, et al. Exploratory analyses of efficacy data from major depressive disorder trials. J Clin Psychiatry. 2011;72(4):464–472.

9. Khin NA, Chen YF, Yang Y, et al. Exploratory analysis of efficacy data from schizophrenia trials in support of new drug applications submitted to the US Food and Drug Administration. J Clin Psychiatry. 2012;73(6):856–864.

10. Kemp AS, Schooler NR, Kalali AH, et al. What is causing the reduced drug-placebo difference in recent schizophrenia clinical trials and what can be done about it? Schizophr Bull. 2010;36(3):504–509.

11. Getz KA, Wenger J, Campo RA, et al. Assessing the impact of protocol design changes on clinical trial performance. Am J Ther. 2008;15(5):450–457.

12. Bramstedt KA. Recruiting healthy volunteers for research participation via internet advertising. Clin Med Res. 2007;5(2):91–97.

13. Callahan D. The Cheating Culture: Why More Americans are Doing Wrong to Get Ahead. Orlando, FL: Harcourt; 2004.

14. Ariely D. The (Honest) Truth About Dishonesty: How We Lie to Everyone—Especially Ourselves. New York, NY: HarperCollins; 2012.

15. Zimmerman M, Mattia JI, Posternak MA. Are subjects in pharmacological treatment trials of depression representative of patients in routine clinical practice? Am J Psychiatry. 2002;159:469–473.

16. Tishler CL, Bartholomae S. Repeat participation among normal healthy research volunteers: professional guinea pigs in clinical trials? Perspect Biol Med. 2003;46(4):508–520.